
One method we have used with good results is Retrieval-Augmented Generation (RAG) together with the language model. It lets you contextualize the model and use your own data as the source for its output.

Yes, as I said, I have tried it here too, but I was not very impressed with the result.
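The quoted post does not say how their RAG setup is built. A minimal sketch of the retrieval step, assuming a plain word-count cosine similarity in place of the embedding model and vector store a real deployment would normally use: score a handful of in-house documents against the question, then put the best matches into the prompt ahead of the question. The functions `retrieve` and `build_prompt`, the sample documents and the prompt wording are all hypothetical, and the call to the language model itself is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and split into runs of word characters; a stand-in for a real tokenizer.
    return re.findall(r"\w+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-count vectors (0.0 if either is empty).
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Score every document against the query and keep the k best matches.
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # Place the retrieved passages ahead of the question so the model is asked
    # to answer from the organisation's own data rather than from memory alone.
    sources = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    # Hypothetical in-house documents, for illustration only.
    docs = [
        "Our office in Bergen opens at 08:00 on weekdays.",
        "The cafeteria serves lunch between 11:00 and 13:00.",
        "Parking permits are issued by the facilities team.",
    ]
    print(build_prompt("When does the Bergen office open?", docs, k=1))
```

Grounding the prompt this way narrows what the model is asked to draw on, but nothing in the retrieval step forces the generated answer to stay within the supplied sources.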

The use of RAG does not completely eliminate the general challenges faced by LLMs, including hallucination.

en.m.wikipedia.org


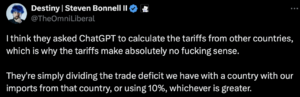

For example, a chatbot powered by large language models (LLMs), like ChatGPT, may embed plausible-sounding random falsehoods within its generated content. Researchers have recognized this issue, and by 2023, analysts estimated that chatbots hallucinate as much as 27% of the time,[8] with factual errors present in 46% of generated texts.[9] Detecting and mitigating these hallucinations pose significant challenges for the practical deployment and reliability of LLMs in real-world scenarios.[10][8][9]

Further reading: The hallucination phenomenon is still not completely understood. Researchers have also proposed that hallucinations are inevitable and are an innate limitation of large language models.[73] Therefore, there is still ongoing research to try to mitigate their occurrence.[74] In particular, it was shown that language models not only hallucinate but also amplify hallucinations, even models that were designed to alleviate this issue.[75]

Ji et al.[76] divide common mitigation methods into two categories: data-related methods and modeling and inference methods. Data-related methods include building a faithful dataset, cleaning data automatically, and information augmentation, i.e. augmenting the inputs with external information. Modeling and inference methods include changes in the architecture (modifying the encoder, the attention mechanism or the decoder in various ways), changes in the training process, such as using reinforcement learning, and post-processing methods that can correct hallucinations in the output.

Researchers have proposed a variety of mitigation measures, including getting different chatbots to debate one another until they reach consensus on an answer.[77] Another approach actively validates the correctness of the model's low-confidence generations using web search results. Its authors showed that a generated sentence is hallucinated more often when the model has already hallucinated in its previously generated sentences for the same input, and they instruct the model to create a validation question that checks the correctness of the information about the selected concept using the Bing Search API.[78] An extra layer of logic-based rules was proposed for the web search mitigation method, using web pages of different ranks, arranged in a hierarchy, as a knowledge base.[79] When there are no external data sources available to validate LLM-generated responses (or the responses are already based on external data, as in RAG), model uncertainty estimation techniques from machine learning may be applied to detect hallucinations.[80]
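The second approach in the passage above (find the low-confidence parts of the output, then check them with a search query) can be sketched roughly as follows. This is an illustration under stated assumptions, not the cited authors' procedure: it assumes per-token log-probabilities are available from the model, takes a sentence's confidence to be the probability of its least likely token, and uses an arbitrary threshold of 0.3. The function names, the threshold, the question template and the sample log-probabilities are all hypothetical, and no Bing Search API call is made.

```python
import math


def sentence_confidence(token_logprobs: list[float]) -> float:
    # Confidence = probability of the least likely token (expects at least one token):
    # a single improbable token, such as a name, date or number, makes the sentence suspect.
    return min(math.exp(lp) for lp in token_logprobs)


def flag_low_confidence(
    sentences: list[tuple[str, list[float]]], threshold: float = 0.3
) -> list[str]:
    # Return the sentences whose confidence falls below the (arbitrary) threshold.
    return [text for text, logprobs in sentences if sentence_confidence(logprobs) < threshold]


def validation_question(sentence: str) -> str:
    # Turn a flagged claim into a yes/no question that could be sent to a
    # search engine; the search call itself is not shown here.
    claim = sentence.rstrip(".")
    return f"Is it true that {claim[0].lower()}{claim[1:]}?"


if __name__ == "__main__":
    # Made-up per-token log-probabilities, for illustration only.
    generated = [
        ("The meeting is on Monday.", [-0.02, -0.10, -0.05]),
        ("The budget was approved in 2017.", [-0.03, -0.20, -2.30]),
    ]
    for s in flag_low_confidence(generated):
        print(validation_question(s))
```

Using the minimum rather than the average token probability is a deliberate choice here: averaging lets one fabricated detail hide among many confident filler tokens.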

spectrum.ieee.org


Norwegian man reports ChatGPT to the Data Protection Authority (Datatilsynet)

He demands a reckoning with ChatGPT and is getting support for his complaint. www.nrk.no

arstechnica.com


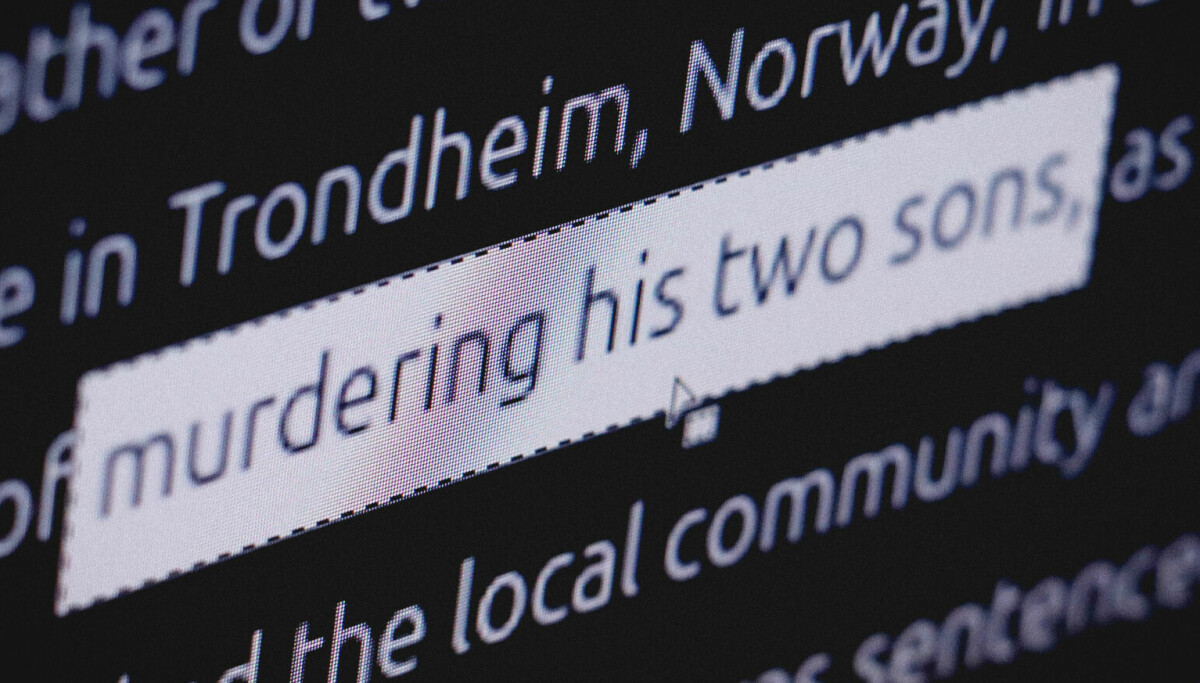

ChatGPT claimed Arve killed his sons; now he is filing a complaint

"The fact that someone could read this and believe it is true is what scares me the most," says Arve Hjalmar Holmen, who is now getting help from Noyb to file a complaint against OpenAI. www.kode24.no

There is precious little OpenAI can do to "delete the defamatory content and adjust the model so that it does not generate inaccurate results". It is a bullshit generator, and f*** knows which associations it strung together to produce a plausible-looking sentence. It has no relationship to whether something is true or not.

Dad demands OpenAI delete ChatGPT’s false claim that he murdered his kids

Blocking outputs isn’t enough; dad wants OpenAI to delete the false information. arstechnica.com

It could become an interesting legal question whether this is covered by GDPR or not.

It is just as necessary that he gets fired as it was for education ministers with fudged master's degrees to get the sack. You simply cannot accept something like that. This is not a misplaced comma in a subordinate clause, but a completely deliberate falsification of a "knowledge base" for a significant decision. It has to have consequences.

The project leader probably has no intention of leaving until the wind turns hard. This is just a trifle. From the local paper up there (iTromsø? The source was not given where I found the picture.)

The implications of this case are enormous. In Tromsø they are now going through several reports to look for similar cases.

I verbally tore the head off a young up-and-comer who tried to sell us some AI stuff yesterday. My bullshit filter filled up and overflowed. I don't think he had a good day at work, unfortunately.

And remember: young people are trained in selling their own excellence and in managing communication.

The problem is that what AI throws in our faces is the way we ourselves use text.

no.linkedin.com


Here AI was used in the worst possible way: to produce a (fake) expert report supporting a political decision that was probably unpopular, but perhaps necessary. In other words, to produce an administrative weapon: a report stuffed with references to research.

What about clear guidelines for what the public sector should actually NOT do with AI?
